Tutorial: Introduction to eye and audio behavior computing for affect analysis in wearable contexts

This tutorial is held at the 2023 International Conference on Affective Computing and Intelligent Interaction (ACII) at the MIT Media Lab in Cambridge, MA, USA on 10 Sept. 2023. The primary target audience is students and early-stage researchers in any field of study who have used, or wish to use, eye or speech behaviour, multimodality and/or wearables in their projects. Basic knowledge of machine learning or pattern recognition is a prerequisite. The tutorial content covers a wide range of topics, from general concepts to more detailed mathematical and technical aspects, benefiting both non-technical and technical audiences. It will offer fresh insights to those looking to explore this emerging field and will also be valuable to researchers with experience in eye and speech behaviour or multimodal affective computing.

Tutorial: Introduction to eye and speech behavior computing for affect analysis in wearable contexts

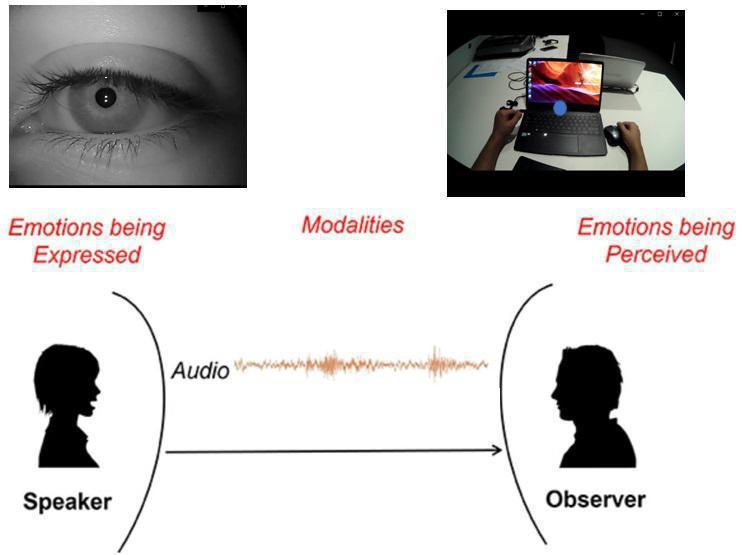

This tutorial is held at the 25th ACM International Conference on Multimodal Interaction (ICMI) at Sorbonne University, Campus Pierre & Marie Curie on 13 Oct. 2023. It is designed to introduce the foundational computing methods for analysing close-up infrared eye images and speech/audio signals from body-worn sensors, the theoretical (e.g. psychophysiological) basis for the relationship between sensing modalities and affect, and the latest developments in models for affect analysis. It will cover eye and speech/audio behaviour analysis, statistical modelling and machine learning pipelines, as well as multimodal systems centred on eye and speech/audio behaviour for affect. Various application areas will be discussed, along with examples that illustrate the potential, challenges, and pitfalls of methodologies in wearable contexts.

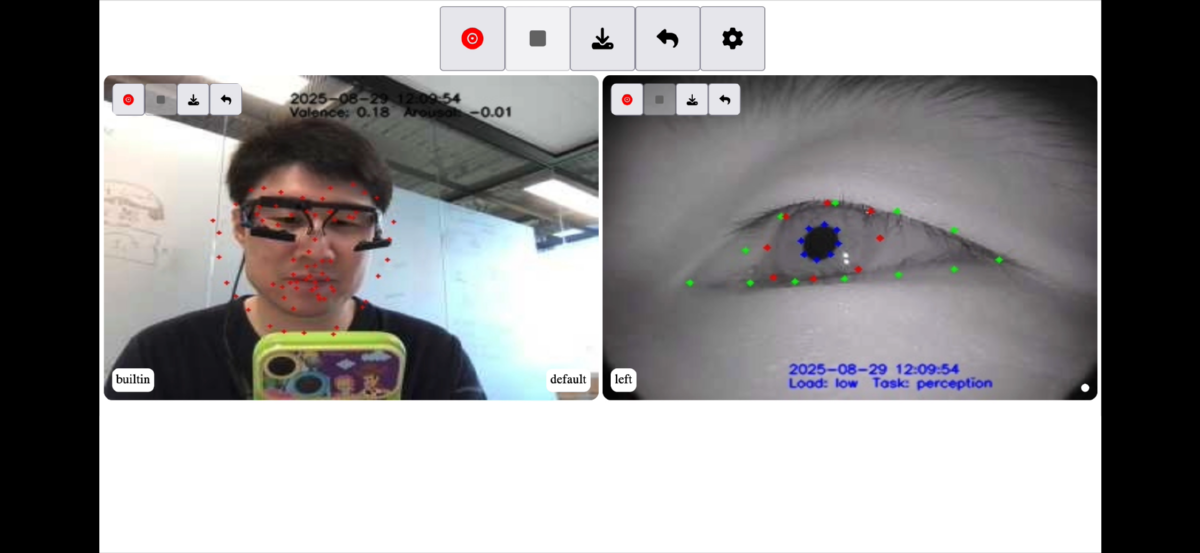

Open-access dataset: IREye4Task

IREye4Task is an open-access dataset for wearable eye landmark detection (computer vision) and mental state analysis (signal processing and affective computing). It contains annotated landmarks on the eyelid, pupil and iris boundary for each frame of eye videos from 20 participants (more than a million frames in total), recorded in response to four task contexts (cognitive, perceptual, physical and communicative) at two task load levels, together with ground truth for the task context at a high level of granularity.

It is free to download and is for research use only.